The Rise of Domain-Specific AI Models in 2026

For the last few years, the AI conversation has been dominated by size. Bigger models. More parameters. More data. More compute. The assumption was simple: the larger the model, the smarter it must be.

That assumption is starting to break.

As enterprises move from experimentation to real production workloads, a quieter shift is taking place. Instead of betting everything on massive, general-purpose models, companies are increasingly turning to domain-specific AI models — smaller systems trained deeply on a narrow slice of knowledge.

By 2026, many experts expect these domain-specific language models (DSLMs) to outperform general models for most enterprise use cases. Not because they’re more powerful in theory, but because they’re more practical in reality.

Why General Models Are Hitting Enterprise Limits

Large, general AI models are impressive. They can write, reason, translate, summarize, and answer across a wide range of topics. But that breadth comes with tradeoffs that enterprises quickly feel once systems move into production.

First, there’s cost. Large models are expensive to run, especially at scale. Inference costs add up fast when models are used across customer support, internal tools, analytics, and automation workflows.

Second, there’s control. General models are trained on broad, mixed datasets. For regulated industries — finance, healthcare, legal, and manufacturing — this creates governance headaches. Explaining why a model made a decision, or proving compliance, becomes difficult.

Third, there’s signal-to-noise. When a model knows “a little about everything,” it often knows “not enough about one thing.” Enterprises don’t need AI that can write poetry and code. They need AI that deeply understands their contracts, their processes, and their data.

This is where domain-specific models start to shine.

What Makes Domain-Specific Models Different

A domain-specific AI model is trained or fine-tuned on a narrow, high-quality dataset tied to a particular industry, function, or workflow. Instead of trying to understand the entire world, it becomes extremely good at understanding one part of it.

That focus changes everything.

Because DSLMs are smaller, they’re cheaper to run and easier to deploy closer to the data. Because they’re trained on curated domain knowledge, they’re more accurate within that domain. Because their scope is limited, they’re easier to test, explain, and govern.

In many cases, a well-trained DSLM can outperform a general model on specific tasks not because it’s smarter overall, but because it’s less distracted.

Where Enterprises Are Already Seeing Value

This shift is already visible across industries.

In healthcare, models trained specifically on clinical notes, radiology reports, or medical coding can outperform general models on accuracy and explainability for those tasks. In finance, DSLMs trained on transaction data, regulatory language, and internal risk models are proving more reliable for fraud detection and compliance tasks.

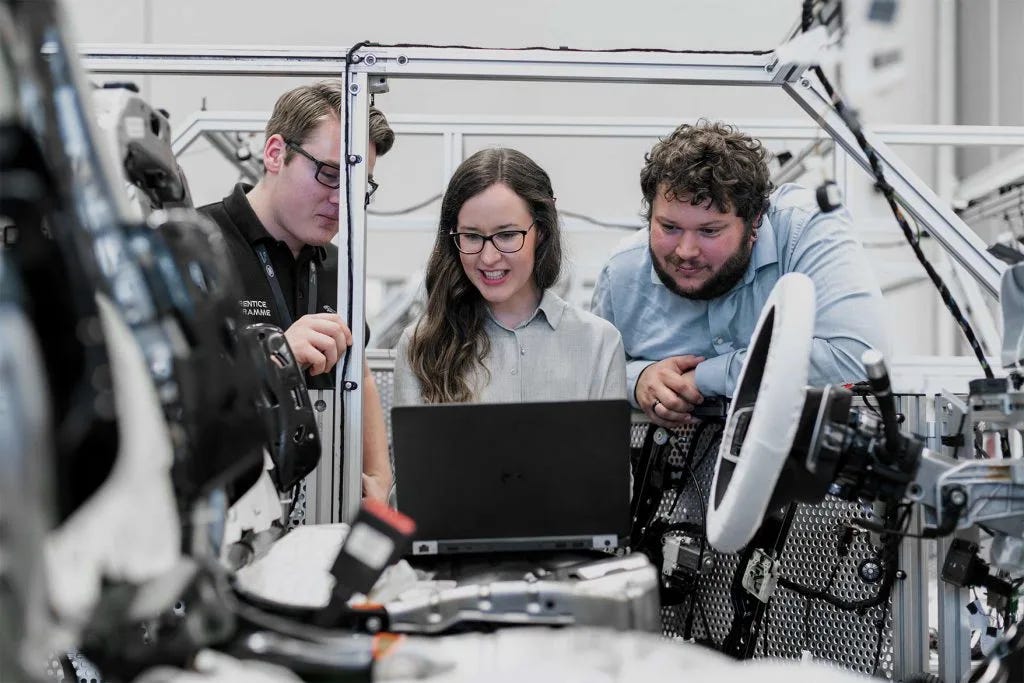

Manufacturing companies are building models that understand machine telemetry and maintenance logs. Legal teams are deploying models trained exclusively on case law, contracts, and internal precedents. Customer support teams are using DSLMs grounded in product documentation and historical tickets instead of generic chatbots.

In each case, the value isn’t novelty; it’s trust and performance.

Efficiency, Governance, and Interpretability

One of the most compelling arguments for DSLMs is governance.

Smaller models are easier to audit. Their training data can be documented precisely. Their behavior is more predictable. When something goes wrong, teams can trace it back to specific inputs or assumptions.

This matters as AI regulations evolve and internal risk teams demand clearer accountability. It’s much easier to defend a system that only knows what it’s supposed to know than one trained on the entire internet.

There’s also a practical infrastructure advantage. DSLMs can often run on smaller GPUs, CPUs, or even at the edge. That reduces latency, improves privacy, and lowers operational costs — all critical factors for enterprise adoption.

Why 2026 Will Be the Inflection Point

The tooling ecosystem is rapidly catching up.

Frameworks for fine-tuning, retrieval augmentation, and model orchestration are making it easier to build and maintain DSLMs. Open-source foundation models provide strong starting points. Enterprises are realizing they don’t need to train from scratch — they need to specialize intelligently.

At the same time, AI workloads are moving closer to business logic. Instead of calling one giant model for everything, systems are starting to route tasks to the right model for the job. A general model might still orchestrate, or reason at a high level, but domain models do the real work.

By 2026, this multi-model architecture, combining general and domain-specific models, is likely to become the default.
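
To make the routing idea concrete, here is a minimal Python sketch of a dispatcher that sends each task to a domain model when one matches and falls back to a general model otherwise. Everything in it is an assumption for illustration: the handler functions (general_model, legal_dslm, finance_dslm) are stand-ins for real model calls, and the keyword matching is a deliberately naive placeholder for whatever classifier or orchestrating model a production system would use.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


# Hypothetical stand-ins for real model calls; each just tags the task
# with the name of the model that would have handled it.
def general_model(task: str) -> str:
    return f"[general] {task}"


def legal_dslm(task: str) -> str:
    return f"[legal-dslm] {task}"


def finance_dslm(task: str) -> str:
    return f"[finance-dslm] {task}"


@dataclass(frozen=True)
class Route:
    """One domain route: trigger keywords plus the model that handles them."""
    name: str
    keywords: Tuple[str, ...]
    handler: Callable[[str], str]


# Illustrative routing table (assumed domains and keywords).
ROUTES = (
    Route("legal", ("contract", "clause", "precedent"), legal_dslm),
    Route("finance", ("transaction", "fraud", "compliance"), finance_dslm),
)


def route(task: str) -> str:
    """Send the task to the first matching domain model, else the general model."""
    lowered = task.lower()
    for candidate in ROUTES:
        if any(keyword in lowered for keyword in candidate.keywords):
            return candidate.handler(task)
    return general_model(task)


if __name__ == "__main__":
    for example in (
        "Review this contract clause",
        "Flag a suspicious transaction",
        "Write a poem about spring",
    ):
        print(route(example))
```

The routing table is kept as plain data so that adding a new domain means adding one entry rather than changing the dispatch logic. In practice the keyword check would likely be replaced by a small classifier or by the general model itself acting as orchestrator.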

The Risk of Sticking to One-Size-Fits-All

Companies that rely exclusively on large, generic models may find themselves at a disadvantage.

They’ll pay more to get less precision. They’ll struggle with explainability. They’ll face resistance from compliance and security teams. And as competitors deploy faster, cheaper, and more accurate DSLMs, the gap will widen.

The risk isn’t that general models disappear. It’s that they stop being enough on their own.

How 0xMetalabs Approaches Domain-Specific AI

At 0xMetalabs, we help teams move from “AI experiments” to AI systems that actually fit the business.

That often means identifying where domain specificity creates the most leverage: high-volume workflows, regulated decisions, or areas where accuracy matters more than creativity. From there, we help design architectures where DSLMs integrate cleanly with existing systems, data pipelines, and governance processes.

The goal isn’t to replace general models. It’s to use them where they make sense and deploy domain-specific models where precision, cost, and trust matter more.

Final Thought

The future of enterprise AI isn’t bigger models. It’s better-aligned models.

As organizations mature in their AI adoption, they’re realizing that intelligence isn’t about knowing everything. It’s about knowing the right things deeply.

Domain-specific AI models represent that shift. And by 2026, one-size-fits-all won’t be the default anymore; it’ll be the exception.
